Bayesian Filtering for Audio Denoising

1.1 Problem Statement and Motivation

Audio signals often contain unwanted noise from recording environments, electronic circuitry, or other external factors. This noise can obscure critical details, affecting applications like speech enhancement, music production, or field recordings. Audio denoising is therefore a key step in improving signal quality.

Classical methods, like frequency-domain Wiener filtering, may struggle with time-varying signal characteristics or non-stationary noise. In contrast, probabilistic state-space models provide a more flexible framework by capturing temporal dynamics and handling uncertainty. By leveraging these models, we can integrate knowledge about signal behavior into the denoising process.

In this notebook, we focus on offline audio denoising, where the entire audio recording is available beforehand. This allows us to apply a Kalman smoother, which uses both past and future observations to estimate the clean signal. Additionally, we use Bayesian parameter estimation to infer noise characteristics directly from the data.

1.2 Overview of the Approach

Our approach combines the state-space model perspective with the Kalman filter/smoother, using RxInfer.jl for probabilistic modeling and inference:

State-space modeling:

- The clean audio signal is modeled as a hidden (latent) process evolving over time.

- The observed noisy audio is treated as measurements corrupted by noise.

Kalman Filter and Smoother:

- The Kalman filter estimates the latent signal using past and current observations.

- The Kalman smoother refines these estimates using future observations for higher-quality results.

RxInfer integration:

- Instead of manually implementing Kalman equations, we use RxInfer to define the probabilistic model.

- RxInfer handles inference, including posterior estimation of the clean signal.

This pipeline effectively combines probabilistic modeling with temporal dynamics for robust audio denoising. In the following sections, we’ll prepare the data, implement the RxInfer model, visualize results, and explore extensions for more complex scenarios.

2. A Simple Example

2.1 Simple Sinusoidal Signal Dataset

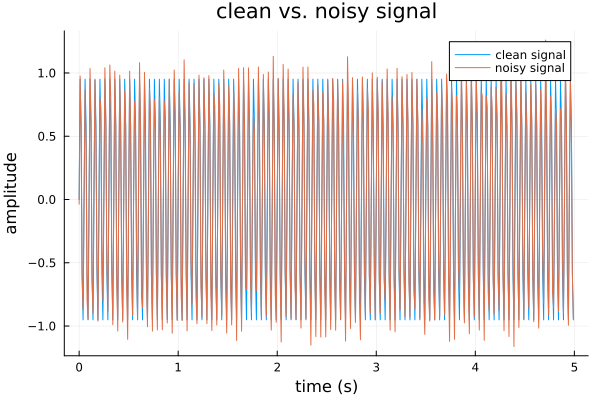

For illustrative purposes and to start simple, we’ll generate a pure sine wave at a specific frequency and length, then add noise to simulate a “noisy audio” signal. This will allow us to clearly see and measure the amount of noise introduced, and to easily verify whether our denoising method is working.

2.2 Data Generation

To start, we generate a synthetic sine wave and add Gaussian noise to simulate a noisy signal. The following plot below shows the clean and noisy signals for comparison.

Parameters

- Sampling rate: for example 100 Hz (just to mimic an audio-like environment without large arrays)

- Duration: a short signal, say 5 seconds

- Frequency: a single tone (within humanly audible range, and still readable in a plot), like 20 Hz

- Amplitude: we can set the amplitude to 1.0 for simplicity

Noisy signal creation

- We’ll generate a time vector $t$ from 0 to 2 seconds at the chosen sampling rate

- Compute a sine wave:

$$ x(t) = \sin(2\pi f t) $$

- Add Gaussian noise (or another noise type) to produce our observed signal $$ y(t) = x(t) + \varepsilon $$

using Pkg; Pkg.activate(".")

using Plots

using Random

# parameters

fs = 100 # sampling rate

duration = 5 # seconds

f = 20 # frequency in Hz

amplitude = 1.0 # amplitude

noise_std = 0.1 # noise standard deviation

# time vector

t = 0:1/fs:(duration - 1/fs)

# clean sine wave

x = amplitude * sin.(2 * π * f .* t)

# add Gaussian noise

Random.seed!(42) # for reproducibility

noise = noise_std .* randn(length(t))

y_meas = x .+ noise

# plot the signals

# plot the signals

plot(

t, x,

label = "clean signal",

xlabel = "time (s)",

ylabel = "amplitude",

title = "clean vs. noisy signal",

legend = :topright

)

plot!(

t, y_meas,

label = "noisy signal"

)

3. Theoretical Background

Here we introduce the state-space formulation of a linear dynamical system and explain how the Kalman filter and Kalman smoother operate within this framework. For readers interested in a more detailed mathematical explanation, please refer to my other blog post on the Kalman Filter.

3.1 State-Space Representation

A state-space model expresses an observed time-series $\mathbf{y}_t$ in terms of a latent state $\mathbf{x}_t$ that evolves over time. In its simplest linear form:

\[ x_{t+1} = \mathbf{F} x_{t} + w_t, \quad w_t \sim \mathcal{N}(0, Q), \] \[ y_{t} = \mathbf{H} x_{t} + v_t, \quad v_t \sim \mathcal{N}(0, R), \]

where:

- $x_t$: hidden state at time $t$

- $y_t$: observed measurement at time $t$

- $\mathbf{F}$: state transition matrix

- $\mathbf{H}$: observation matrix

- $w_t, v_t$: Gaussian noise terms with covariances $Q$ and $R$

For audio denoising, this simplifies to a 1-D case where:

- $F = 1$: assumes a random walk or near-identity transition

- $H = 1$: the measurement equals the state plus noise

This framework captures the hidden clean signal $x_t$, corrupted by noise $v_t$ in the observed $y_t$.

3.2 The Kalman Filter (Forward Pass)

The Kalman filter estimates the latent state $x_t$ sequentially, using two steps at each time $t$:

1. Prediction:

The current state is predicted based on the previous state using the system dynamics, with an estimate of the state uncertainty.

2. Update:

Once the next observation is available, the prediction is refined by balancing the model’s uncertainty against the uncertainty in the new measurement. This is governed by the Kalman gain, which determines how much weight to place on the observation versus the prediction.

The Kalman filter operates sequentially, meaning it processes data in real-time as observations are received.

3.3 The Kalman Smoother (Forward + Backward Pass)

When all observations are available (i.e., offline processing), the Kalman smoother refines each state estimate by incorporating information from both past and future observations. This process involves:

- Forward Pass: Similar to the Kalman filter, producing estimates based on past observations.

- Backward Pass: Refines these estimates by incorporating future observations, improving accuracy for offline applications like audio denoising.

The smoother is particularly useful for signals with temporal correlations, as it leverages the entire dataset to produce a more accurate reconstruction of the clean signal.

This section provides a high-level overview of how the Kalman filter and smoother operate. For detailed mathematical derivations and step-by-step explanations, refer to my other blog post.

3.4 Relation to AR/ARMA Models

An AR($p$) model: $$ y_t = \sum_{i=1}^{p} \phi_i y_{t-i} + \epsilon_t, \quad \epsilon_t \sim \mathcal{N}(0, \sigma^2), $$

can be transformed into a state-space form by defining a vector of past values as the state:

\[ x_t = \begin{bmatrix} y_t \\ y_{t-1} \\ \vdots \\ y_{t-p+1} \end{bmatrix}, \]

This highlights the generality of the state-space framework, unifying AR models and Kalman filtering.

3.5 Putting It All Together

- Hidden state: the clean signal $x_t$

- Observation: the noisy signal $y_t$

- Filtering: quick forward-time estimates

- Smoothing: refined estimates using all data

This setup is well-suited for audio denoising, leveraging temporal correlations for robust reconstruction.

4. Implementation with RxInfer (the Fun Stuff)

In this section, we translate our simple state-space denoising model into RxInfer.jl’s user-friendly model notation and run the Kalman smoothing procedure.

4.1 Simple Case: $\mathbf{Q}$ and $\mathbf{R}$ Are Known

Recall the 1-D state-space model:

\[ x_{t+1} = \mathbf{F} x_{t} + w_t, \quad w_t \sim \mathcal{N}(0, Q), \] \[ y_{t} = \mathbf{H} x_{t} + v_t, \quad v_t \sim \mathcal{N}(0, R), \]

Here, we assume $\mathbf{Q}$ and $\mathbf{R}$ are given constants (e.g., we know the noise variance). This simplifies the model by removing the need to estimate the noise characteristics.

4.2 Defining the Model

We define a minimal RxInfer model as follows:

using RxInfer

@model function simple_denoising_model(y, Q, R)

x[1] ~ Normal(mean = 0 , variance = Q)

y[1] ~ Normal(mean = x[1], variance = R)

for t in 2:length(y) # we can use length(y) because we already have data associated with y

x[t] ~ Normal(mean = x[t-1], variance = Q)

y[t] ~ Normal(mean = x[t] , variance = R)

end

end

The run_audio_smoother function defines the inference engine, which runs the Kalman smoothing process using the simple_denoising_model. Here, we specify the known values of $Q$ and $R$, along with the observed noisy signal $y$.

# define the smoother engine

function run_audio_smoother(y_data; Q, R)

# run the smoother

result = infer(

model = simple_denoising_model(Q = Q, R = R),

data = (y = y_data,),

options = (limit_stack_depth = 500, )

)

return result

end

run_audio_smoother (generic function with 1 method)

Next, we fix the values of $Q$ and $R$ to represent the process and observation noise variances, respectively. Using the run_audio_smoother function, we compute the posterior means and standard deviations of the latent state $x_t$, which represent the denoised signal and its uncertainty. From these, we calculate the uncertainty bands.

# fix Q and R

Q = 10

R = 1e-1

# run the smoother

result = run_audio_smoother(y_meas; Q = Q, R = R)

# extract posterior means and standard deviations

x_means = mean.(result.posteriors[:x])

x_stds = std.(result.posteriors[:x])

# compute uncertainty bands

x_upper = x_means .+ x_stds

x_lower = x_means .- x_stds

;

Finally, we visualize the results by plotting the clean sine wave, the noisy signal, and the denoised signal inferred by the Kalman smoother. Additionally, we include the uncertainty bands around the denoised signal to showcase the model’s confidence in its estimates.

# plot the clean sine wave

plot(

t, x,

label = "clean sine signal",

xlabel = "time (s)",

ylabel = "amplitude",

title = "noisy vs. clean sine wave",

legend = :topleft

)

# add the noisy signal

plot!(

t, y_meas,

label = "noisy signal"

)

# add the denoised signal

plot!(

t, x_means,

label = "denoised signal",

linestyle = :dash

)

# add the uncertainty ribbon

plot!(

t, x_means,

ribbon = (x_means .- x_lower, x_upper .- x_means),

label = "uncertainty",

color = :blue,

alpha = 0.2

)

In the plot above, we see the denoising results from the Kalman smoother using the simple model with fixed noise covariances ($ Q $ and $ R $):

- Clean Sine Signal: The ground truth waveform that serves as our benchmark.

- Noisy Signal: The sine wave corrupted with Gaussian noise, which simulates real-world noisy observations.

- Denoised Signal: The Kalman smoother’s reconstruction of the clean signal, based on the given $ Q $ and $ R $.

- Uncertainty: A shaded region representing the uncertainty in the inferred signal, derived from the posterior variance of the latent state $ x_t $.

Key observations:

- The denoised signal closely follows the clean sine wave, effectively reducing the noise and preserving the underlying waveform’s structure.

- The uncertainty bands are narrow, reflecting the confidence of the model in its reconstruction. This is expected since the noise covariances were fixed and known beforehand, allowing the model to focus solely on estimating the signal.

This plot demonstrates the effectiveness of the Kalman smoother in recovering clean signals when the noise characteristics are well-defined.

4.3 Introducing Uncertainty in Noise Covariances $ Q $ and $ R $

In the real world, the exact noise characteristics of a system are rarely known. To address this, we extend the previous model by treating the process noise covariance $ Q $ and the observation noise covariance $ R $ as uncertain parameters with prior distributions. This approach allows us to infer $ Q $ and $ R $ directly from the data, making the model more flexible and robust.

We use Inverse Gamma priors for both $ Q $ and $ R $, which are commonly used for variance parameters in Bayesian statistics. The updated model retains the same structure for the latent state $ x_t $ and observed signal $ y_t $, but now incorporates these priors:

\[ Q \sim \mathcal{IG}(\alpha_Q, \beta_Q), \] \[ R \sim \mathcal{IG}(\alpha_R, \beta_R), \] \[ x_1 \sim \mathcal{N}(0, Q), \] \[ y_1 \sim \mathcal{N}(x_1, R), \] \[ x_{t+1} \sim \mathcal{N}(x_t, Q), \] \[ y_t \sim \mathcal{N}(x_t, R). \]

This enhancement enables the model to handle situations where the noise levels are unknown or vary across datasets.

@model function simple_denoising_model_unknown_Q_R(y)

# put priors on Q and R

Q ~ InverseGamma(2, 0.01)

R ~ InverseGamma(2, 0.01)

x[1] ~ Normal(mean = 0 , variance = Q)

y[1] ~ Normal(mean = x[1], variance = R)

for t in 2:length(y) # we can use length(y) because we already have data associated with y

x[t] ~ Normal(mean = x[t-1], variance = Q)

y[t] ~ Normal(mean = x[t] , variance = R)

end

end

Initialization and Constraints

To enable inference, we set up initial distributions for the latent states $ x_t $ and the noise variances $ Q $ and $ R $. These initializations act as starting points for the variational inference process and are critical in guiding the model to meaningful results.

The latent states $ x_t $ are initialized with a Normal distribution centered at 0 with variance 1, reflecting a vague assumption about the signal’s amplitude. For the noise covariances:

Process Noise ($ Q $):

- Prior: $ Q \sim \mathcal{IG}(2, 1) $

- Implication: A moderate prior mean for $ Q $ (expected variance of 1) allows the model to capture meaningful changes in the underlying signal without over-smoothing it. The slightly broader scale provides some flexibility for the inference process.

Observation Noise ($ R $):

- Prior: $ R \sim \mathcal{IG}(2, 0.1) $

- Implication: A smaller prior mean for $ R $ (expected variance of 0.1) reflects our belief that the observed data is relatively reliable, while still allowing some noise.

These priors were chosen through trial and error to simulate a pre-calibration step in this example. This highlights the importance of selecting priors that reflect reasonable assumptions about the signal and noise. The specific choices here provide a balance between capturing signal dynamics and trusting the noisy observations.

To simplify the inference process, we also impose a factorization constraint on the posterior distributions of $ x_t $, $ Q $, and $ R $, assuming that they are independent.

# set up initialization for priors

simple_model_initialization = @initialization begin

q(x) = NormalMeanVariance(0.0, 1.0)

q(Q) = InverseGamma(2, 1)

q(R) = InverseGamma(2, 0.1)

end

simple_model_constraints = @constraints begin

q(x, Q, R) = q(x)q(Q)q(R)

end

Constraints:

q(x, Q, R) = q(x)q(Q)q(R)

The run_simple_smoother_unknown_Q_R function performs inference for the denoising model with uncertain $ Q $ and $ R $. It uses the simple_denoising_model_unknown_Q_R defined earlier, along with the initialized priors and factorization constraints.

By providing the noisy observations $ y $ as data, this function estimates the posterior distributions of the latent state $ x $ and the noise covariances $ Q $ and $ R $. The limit_stack_depth option ensures stability during inference for longer time-series data.

function run_simple_smoother_unknown_Q_R(y_data)

result = infer(

model = simple_denoising_model_unknown_Q_R(),

data = (y = y_data,),

initialization = simple_model_initialization,

constraints = simple_model_constraints,

options = (limit_stack_depth = 500, )

)

end

run_simple_smoother_unknown_Q_R (generic function with 1 method)

Now let’s fire up that RxInfer!

result_unknown_Q_R = run_simple_smoother_unknown_Q_R(y_meas)

Inference results:

Posteriors | available for (R, Q, x)

Finally, now that we have the inference results, we extract the posterior means and standard deviations of the latent state $ x_t $ from the model with uncertain $ Q $ and $ R $. Using these values, we compute uncertainty bands that represent the model’s confidence around the denoised signal.

The plot includes:

- The clean sine wave (ground truth),

- The noisy observations,

- The denoised signal obtained when $ Q $ and $ R $ are fixed,

- The denoised signal with uncertain $ Q $ and $ R $,

- An uncertainty ribbon to highlight the variability around the posterior mean.

This visualization showcases the model’s ability to handle uncertainty in noise parameters while providing a denoised signal and quantifying its confidence.

# extract posterior means and standar deviations for x[t] with uncertain Q and R

x_means_unknown_Q_R = mean.(result_unknown_Q_R.posteriors[:x])

x_stds_unknown_Q_R = std.(result_unknown_Q_R.posteriors[:x])

# compute uncertainty bands for x[t] with uncertain Q and R

x_upper_unknown_Q_R = x_means_unknown_Q_R .+ x_stds_unknown_Q_R

x_lower_unknown_Q_R = x_means_unknown_Q_R .- x_stds_unknown_Q_R

# Plot the clean sine wave

plot(

t, x,

label = "clean sine signal",

xlabel = "time (s)",

ylabel = "amplitude",

title = "noisy vs. clean sine wave",

legend = :topleft

)

# Add the noisy signal

plot!(

t, y_meas,

label = "noisy signal"

)

# Add the denoised signal (unknown Q and R)

plot!(

t, x_means_unknown_Q_R,

label = "denoised signal unknown Q and R",

linestyle = :dash

)

# Add the uncertainty ribbon for the denoised signal (unknown Q and R)

plot!(

t, x_means_unknown_Q_R,

ribbon = (x_means_unknown_Q_R .- x_lower_unknown_Q_R, x_upper_unknown_Q_R .- x_means_unknown_Q_R),

label = "uncertainty (unknown Q, R)",

color = :blue,

alpha = 0.2

)

Results

The plot above illustrates the results of applying the Kalman smoother to a noisy sine wave. Key elements of the plot are:

- Clean Sine Signal: The original signal (ground truth), which we aim to recover.

- Noisy Signal: The observed signal corrupted by Gaussian noise.

- Denoised Signal: The output of the Kalman smoother when the noise covariances ($ Q $ and $ R $) are known.

- Denoised Signal (Unknown $ Q, R $): The smoother’s output when the noise covariances are inferred from the data.

- Uncertainty (Unknown $ Q, R $): The shaded region around the denoised signal, showing the standard deviation of the posterior distribution of the hidden state.

Notice how the smoother effectively reconstructs the clean sine wave, even when $ Q $ and $ R $ are inferred, while providing a measure of uncertainty around the estimates. This demonstrates the power of the probabilistic approach in handling noisy observations.

4.4 Using an Actual Audio File for Denoising

To demonstrate the practical application of the Kalman smoother, we transition from synthetic sine wave data to an actual audio file. In this example, we use a track generously provided under a free license by Scandinavianz, titled Tranvik, available for download as an MP3 file on Pixabay.

For the purpose of this exercise, the MP3 file was converted to WAV format using foobar2000 to facilitate processing in Julia. We then load the .wav file directly and extract the audio data along with its sampling rate. This audio data will be processed in subsequent steps, including slicing, noise addition, and denoising using our probabilistic framework. By working with a real-world audio signal, we aim to demonstrate the broader applicability of the Kalman smoother for practical audio enhancement tasks.

using FileIO

using LibSndFile

using SampledSignals

audio_data = load("data/scandinavianz-tranvik-free-download-158258.wav")

sr = audio_data.samplerate;

To focus on a manageable segment of the audio file, we extract a slice between 50 and 55 seconds. Using the sampling rate (sr), we calculate the corresponding sample indices and select the desired portion of the audio for further processing.

# Define the time slice (in seconds)

t_start = 50.0 # Start time

t_end = 55.0 # End time

# Calculate the sample indices

start_sample = Int(t_start * sr) + 1 # Add 1 because Julia uses 1-based indexing

end_sample = Int(t_end * sr)

# Extract the slice

audio_slice = audio_data[start_sample:end_sample, :]

audio_slice = SampleBuf(Float32.(audio_slice.data), sr)

Let’s check the audio dimensions.

println("Complete audio dimensions: ", size(audio_data))

println("Audio slice dimensions: ", size(audio_slice))

Complete audio dimensions: (6208751, 2)

Audio slice dimensions: (220500, 2)

The extracted audio slice is averaged across channels to produce a mono signal. This simplifies the analysis by reducing the audio data to a single channel, while retaining the essential features.

typeof(audio_slice)

SampleBuf{Float32, 2}

audio_mono = mean(audio_slice, dims = 2)[:, 1];

println("Mono audio dimensions: ", size(audio_mono))

Mono audio dimensions: (220500,)

Let’s save the original audio (in mono).

# save the original audio slice

save("data/tranvik_original_mono.wav", audio_mono)

To simulate a noisy environment, Gaussian noise is added to the mono audio slice. The noise is generated with a standard deviation of 0.01 for controlled perturbation. A fixed random seed ensures reproducibility of results. The dimensions of the resulting noisy audio are printed for confirmation.

# add noise to the audio slice

noise_std = 0.01

Random.seed!(42) # for reproducibility

noise = noise_std .* randn(length(audio_mono))

audio_noisy = audio_mono .+ noise

println("Noisy audio dimensions: ", size(audio_noisy))

Noisy audio dimensions: (220500,)

A time vector is generated to correspond to the length of the mono audio slice. The total duration of the slice is calculated using the sampling rate ($ sr $), and the time vector spans from 0 to the end of the audio segment with intervals matching the sampling rate. This will be used for plotting and analysis.

# create a time vector

N = length(audio_mono)

duration = N / sr

t = 0:1/sr:(duration - 1/sr);

Here, we output the original mono audio slice and the audio with added noise for a quick listen.

# original sliced audio in mono

audio_mono

# noisy sliced audio in mono

audio_noisy

Let’s visualize both the original mono audio waveform and the noisy version. The plot provides a clear comparison, showing the added noise as deviations from the clean waveform.

# Plot the original audio waveform

plot(

t, audio_mono,

label = "original audio waveform",

xlabel = "time (s)",

ylabel = "amplitude",

title = "audio waveform",

legend = :topleft

)

# Add the noisy audio waveform

plot!(

t, audio_noisy,

label = "noisy audio waveform",

linestyle = :dash,

lw = 0.3 # Line width for the dashed noisy waveform

)

Adjusting $ Q $ and $ R $ for Audio Denoising

To adapt the denoising model to the audio data, we redefine the state-space model to incorporate priors for the noise covariances $ Q $ and $ R $. Here:

- $ Q $ and $ R $ are modeled as inverse gamma distributions with adjusted hyperparameters, reflecting our belief about the variability of the state and observation noise.

- The hidden state $ x_t $ represents the clean audio signal, evolving as a random walk.

- The observations $ y_t $ correspond to the noisy audio samples.

We initialize the priors for $ Q $ and $ R $ with slightly less vague inverse gamma distributions ($ \text{shape} = 2, \text{scale} = 0.1 $), ensuring the model can effectively capture the signal’s characteristics while accounting for uncertainty.

# adjust Q and R

@model function audio_denoising_model_unknown_Q_R(y)

# put priors on Q and R

Q ~ InverseGamma(10, 0.001)

R ~ InverseGamma(10, 0.001)

x[1] ~ Normal(mean = 0 , variance = Q)

y[1] ~ Normal(mean = x[1], variance = R)

for t in 2:length(y) # we can use length(y) because we already have data associated with y

x[t] ~ Normal(mean = x[t-1], variance = Q)

y[t] ~ Normal(mean = x[t] , variance = R)

end

end

# set up initialization for priors

audio_model_initialization = @initialization begin

q(x) = NormalMeanVariance(0.0, 1.0)

q(Q) = InverseGamma(2, 0.01)

q(R) = InverseGamma(2, 0.1)

end

audio_model_constraints = @constraints begin

q(x, Q, R) = q(x)q(Q)q(R)

end

Constraints:

q(x, Q, R) = q(x)q(Q)q(R)

Let’s run that Rxinfer again!

function run_audio_smoother_unknown_Q_R(y_data)

result = infer(

model = audio_denoising_model_unknown_Q_R(),

data = (y = y_data,),

initialization = audio_model_initialization,

constraints = audio_model_constraints,

options = (limit_stack_depth = 500, )

)

end

result_audio = run_audio_smoother_unknown_Q_R(audio_noisy)

Inference results:

Posteriors | available for (R, Q, x)

# extract posterior means and standar deviations for x[t] with uncertain Q and R

denoised_audio_means = mean.(result_audio.posteriors[:x])

denoised_audio_stds = std.(result_audio.posteriors[:x])

# compute uncertainty bands for x[t] with uncertain Q and R

audio_upper = denoised_audio_means .+ denoised_audio_stds

audio_lower = denoised_audio_means .- denoised_audio_stds

# Plot the original audio waveform

plot(

t, audio_mono,

label = "original audio waveform",

xlabel = "time (s)",

ylabel = "amplitude",

title = "audio waveform",

lw = 0.3,

legend = :topleft

)

# Add the noisy audio waveform

plot!(

t, audio_noisy,

label = "noisy audio waveform",

linestyle = :dash,

lw = 0.3

)

# Add the denoised signal

plot!(

t, denoised_audio_means,

label = "denoised signal unknown Q and R",

linestyle = :dashdot,

lw = 2

)

# Add uncertainty ribbon

plot!(

t, denoised_audio_means,

ribbon = (denoised_audio_means .- audio_lower, audio_upper .- denoised_audio_means),

color = :blue,

alpha = 0.2,

label = "uncertainty (unknown Q, R)"

)

The plot above displays the results of applying the Kalman smoother to denoise the audio signal. It includes:

- Original Audio Waveform: The clean audio slice from the original recording (blue).

- Noisy Audio Waveform: The noisy version of the audio after adding Gaussian noise (red, dashed line).

- Denoised Signal: The inferred clean signal using the Kalman smoother with uncertain $ Q $ and $ R $ (green, dashed line).

- Uncertainty Ribbon: The uncertainty bands around the denoised signal, computed as one standard deviation above and below the posterior means (shaded region).

From the plot, it is evident that the denoised signal closely follows the original audio waveform, reducing noise while maintaining the underlying structure of the signal. The uncertainty bands provide additional insight into the variability and confidence of the inferred signal at each time step.

Next, we save the audio files and load them so we can compare how they sound.

FileIO.save("data/tranvik_denoised.wav", Float32.(denoised_audio_means); samplerate = sr)

FileIO.save("data/tranvik_noisy.wav", audio_noisy)

load("data/tranvik_denoised.wav")

load("data/tranvik_noisy.wav")

By listening to the noisy and denoised audio files, it is clear that the denoising process significantly reduces the added noise, bringing the signal closer to its original form. While some residual noise remains, the improvement is noticeable, showing the effectiveness of the probabilistic approach using the Kalman smoother. This result showcases the balance between denoising and preserving the original audio characteristics, even with uncertain noise covariances.

5. Conclusion

In this project, we explored how probabilistic state-space modeling combined with the Kalman smoother can be effectively used for audio denoising. Starting with a simple sinusoidal example, we demonstrated the importance of properly defining model parameters and priors, and then extended the approach to a real-world audio signal.

While the results were not perfect (some noise remained) this example highlights the power and flexibility of Bayesian inference for handling uncertainty and reconstructing signals. With further tuning or more sophisticated models, this framework can be extended to tackle even more complex audio processing tasks. Whether for music production, speech enhancement, or general signal analysis, the tools demonstrated here open the door to a range of exciting possibilities.